Hey there.

It's been an absolutely insane week in bug bounty. Lots of drama about AI + platforms, and most platforms have now spoken out. I've noticed a ton of chatter and speculation without real facts, so I rounded up all the official statements I could find and put them in the Updates section. I encourage you to read through those and form your own opinions rather than just echoing what others are saying. If I missed something, please let me know by replying to this email.

ICYMI, Ariel Garcia left HackerOne this week. Ariel is my partner with Bug Bounty Village and a close friend I’ve had the pleasure of working with at HackerOne these last few years. In case you’re reading this: I’m going to greatly miss working with you daily on H1 stuff, but I know we’ll still have plenty of things to collab on together with BBV. Much love to you, my friend.

Personally, it's been a tough week with everything going on, so I'm making the most of this three-day weekend (in the US at least). Hope you all have a great week, and happy hunting.

Let's dive in.

I’m available for 1:1 calls if you want to chat about bug bounty, career growth, community building, or anything else you think I can help with. You can book time with me here.

PhoneLeak: Exfiltrating Gemini Data Over a Phone Call [📓 Blog]

by Starstrike AI (@StarstrikeAI)

Starstrike details a Gemini data-exfiltration chain demonstrated at Bugswat Tokyo (April 2025), splitting the attack into payload delivery and out-of-band exfiltration. The core idea uses indirect prompt injection to induce the model to encode and leak model-accessible data over an audio/telephony channel, highlighting a non-HTTP path defenders often miss.

Open-Source LLM Tool Claims to Predict CVEs Before Publication [𝕏 Tweet]

by Eugene Lim (@spaceraccoonsec)

Eugene Lim shared an open-source, LLM-assisted pipeline (packaged as a GitHub Action) that aims to flag likely CVEs before they’re published.

Have something you want to Spotlight? Tell me.

HackerOne: GenAI Training on Submissions Not Allowed [𝕏 Tweet]

by Alex Rice (@senorarroz)

Alex Rice shared HackerOne’s clarification that researcher submissions cannot be used to train GenAI models, pointing to updated documentation. The note also acknowledges that “classic” ML use has existed under prior terms and that further wording updates are planned.

Intigriti CEO Statement on AI and Researcher IP [𝕏 Tweet]

by Intigriti

Intigriti published a platform statement on AI usage and researcher intellectual property, outlining expectations for handling submissions and related data. It’s a useful reference point amid broader industry discussion about AI feature rollouts and researcher rights.

YesWeHack Statement on AI Features and Researcher Rights [𝕏 Tweet]

by YesWeHack

YesWeHack outlined an AI feature rollout positioned around automating repetitive security tasks while keeping humans in the loop for critical decisions. The announcement also emphasizes customer-level controls over enabling and using these capabilities.

Report: HackerOne Reduces Bounties, Community Q&A Planned [𝕏 Tweet]

by Critical Thinking Podcast

Critical Thinking Podcast reported recent HackerOne bounty reductions and said a community Q&A with H1 is planned for next week. The thread asks hunters to submit questions to help surface specifics and downstream impact.

H1-3120: Salesforce Live Hacking Event Recap [🎥 Video]

by HackerOne

This recap video follows HackerOne’s H1-3120 live hacking event with Salesforce, focused on adversarial testing of AI systems in production-like environments. It highlights hands-on workflows, emerging LLM attack patterns, and coordination between external researchers and internal security teams.

Hacker spotlight: sw33tLie, bsysop, and godiego [📓 Blog]

by Bugcrowd

Bugcrowd spotlights a trio of researchers who demonstrate how team-driven hacking uncovers weaknesses faster and from more angles; the linked profile shows their methods and teamwork.

Nullcon Goa 2026: Adobe Live Bug Hunting (AI Targets) [📓 Blog]

by NULLCON

Nullcon announced an Adobe live bug hunting event (Feb 28–Mar 1, 2026) focused on AI issues mapped to the OWASP Top 10 for LLMs. The page covers scope (Adobe Express/Acrobat AI assistants), submission requirements via HackerOne, rewards, and rules of engagement.

Did I miss an important update? Tell me.

Five Burp Extensions Commonly Used for Web Testing [𝕏 Tweet]

by Behi

This tweet highlights a short list of Burp Suite extensions frequently used in real-world testing, including Request Smuggler, Turbo Intruder, Param Miner, and Autorize. It’s a compact tooling stack for speeding up coverage across request smuggling, parameter discovery, and authorization checks.

XSS Cheat Sheet Adds Geolocation-Based Vectors [𝕏 Tweet]

by Gareth Heyes (@garethheyes)

Gareth Heyes noted new geolocation-themed XSS payload ideas added to the XSS cheat sheet, with additional context in the replies and credit to @AmirMSafari. It’s a niche but useful payload source when geolocation APIs or permission prompts are part of a flow.

burp-ai-agent v0.2.0 Adds Saved Chats and Markdown Export [𝕏 Tweet]

by Six2dez (@Six2dez1)

Six2dez released burp-ai-agent v0.2.0 with per-project chat persistence, a refreshed UI, and Markdown export for sharing or reporting. The update also tweaks input/keybindings to make AI-assisted Burp sessions less clunky during active testing.

Have a favorite tool? Tell me.

Reported RCE in Google’s Antigravity AI Code Editor ($10k) [𝕏 Tweet]

by Hacktron AI (@HacktronAI)

Hacktron AI reported a remote code execution issue in Google’s Antigravity AI code editor that reportedly earned a $10,000 bounty.

Caido Advisory: DNS Rebinding Bypass Leading to RCE [📓 Blog]

by Caido

Caido’s advisory describes a DNS rebinding protection bypass via an injected X-Forwarded-Host header, enabling access to localhost-only endpoints and potential RCE. The issue affects versions prior to 0.55.0 and is fixed in 0.55.0; the advisory also lists mitigations such as disabling Guest mode.
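
For anyone who wants a mental model of this bug class, here is a minimal sketch of one plausible shape of the flaw. It is not Caido's code: `ALLOWED_HOSTS`, `flawed_check`, and `stricter_check` are hypothetical names, and the assumption is simply that the host allowlist consults a client-supplied forwarding header before the real Host header.

```python
# Hypothetical allowlist for a localhost-only service (illustration only).
ALLOWED_HOSTS = {"localhost", "127.0.0.1"}


def hostname(value: str) -> str:
    # Drop an optional ":port" suffix (IPv6 literals are ignored for brevity).
    return value.split(":")[0]


def flawed_check(headers: dict) -> bool:
    # Assumed flaw: a client-controlled header wins over the real Host header.
    effective = headers.get("x-forwarded-host", headers.get("host", ""))
    return hostname(effective) in ALLOWED_HOSTS


def stricter_check(headers: dict) -> bool:
    # Only the Host header the client actually connected with is considered.
    return hostname(headers.get("host", "")) in ALLOWED_HOSTS


# A rebound request: Host is the attacker's domain, the injected header lies.
rebound = {"host": "attacker.example:8080", "x-forwarded-host": "localhost:8080"}
print(flawed_check(rebound), stricter_check(rebound))
```

The takeaway is that any header a browser script can set should not feed into an origin or host decision.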

A Single-Typo SpiderMonkey Wasm GC Bug to Firefox RCE [📓 Blog]

by kqx (@kqx_io)

kqx breaks down a SpiderMonkey Wasm GC bug caused by a one-character typo that corrupted forwarding-pointer tagging and enabled type confusion between inline and out-of-line arrays. The write-up walks through a practical exploit strategy (GC triggering, heap churn/sprays) to reach arbitrary read/write and eventual RCE, with PoCs and crash traces.

Samsung MagicINFO 9 Server: Pre-Auth RCE Deep Dive (Part 2) [📓 Blog]

by sourceincite (@sourceincite)

sourceincite continues a deep technical teardown of pre-auth RCE paths in Samsung MagicINFO 9 Server, mapping service dispatch and manager class selection via database tables. It covers a traversal/auth bypass and a TOCTOU-driven upload-to-RCE chain (CVE-2025-54446), including patch analysis and exploit constraints.

Unauthenticated Chat Session Takeover in an AI Chatbot [📓 Blog]

by Whit Taylor (@un1tycyb3r)

Whit Taylor describes taking over other users’ chatbot conversations by connecting to a WebSocket session using only a conversation UUID, with no meaningful authz checks. The post details the message format and impact across customer deployments, and notes the vendor quickly disabled the affected endpoint after disclosure.

Trailing Danger: HTTP Trailer Parsing Discrepancies and Request Smuggling [📓 Blog]

by sebsrt (@s3bsrt)

sebsrt explores how inconsistent handling of HTTP trailer fields can lead to header injection and a request-smuggling class dubbed Trailer Merge (TR.MRG). The post compares behavior across HTTP/1.1, HTTP/2, and HTTP/3, and shows practical exploitation when implementations merge trailers into headers post-dechunking (with lighttpd 1.4.80 as a concrete example).
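
To make the merge problem concrete, here is a toy sketch of the behavior described above. It is neither sebsrt's PoC nor lighttpd's implementation; `parse_chunked`, `naive_merge`, and `safe_merge` are hypothetical helpers that only illustrate why folding trailers into headers after dechunking is risky.

```python
def parse_chunked(raw: bytes):
    """Decode a chunked body and return (body, trailers)."""
    body = b""
    pos = 0
    while True:
        line_end = raw.index(b"\r\n", pos)
        # Chunk size is hex; anything after ';' is a chunk extension.
        size = int(raw[pos:line_end].split(b";")[0], 16)
        pos = line_end + 2
        if size == 0:
            break
        body += raw[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF
    trailers = {}
    for line in raw[pos:].split(b"\r\n"):
        if not line:
            break
        name, _, value = line.partition(b":")
        trailers[name.strip().lower().decode()] = value.strip().decode()
    return body, trailers


def naive_merge(headers: dict, trailers: dict) -> dict:
    # Unsafe: trailer fields silently overwrite or add request headers.
    merged = dict(headers)
    merged.update(trailers)
    return merged


def safe_merge(headers: dict, trailers: dict, allowed=frozenset()) -> dict:
    # Only explicitly allowed trailer names are accepted, never as overrides.
    merged = dict(headers)
    for name, value in trailers.items():
        if name in allowed and name not in merged:
            merged[name] = value
    return merged


headers = {"host": "example.test", "transfer-encoding": "chunked"}
raw = b"5\r\nhello\r\n0\r\nX-Forwarded-For: 127.0.0.1\r\nContent-Length: 42\r\n\r\n"
body, trailers = parse_chunked(raw)
print(naive_merge(headers, trailers))
print(safe_merge(headers, trailers))
```

With the naive merge, the request suddenly carries a `content-length` and a spoofed `x-forwarded-for` that the front end never saw as headers, which is the kind of disagreement smuggling relies on.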

Did I miss something? Tell me.

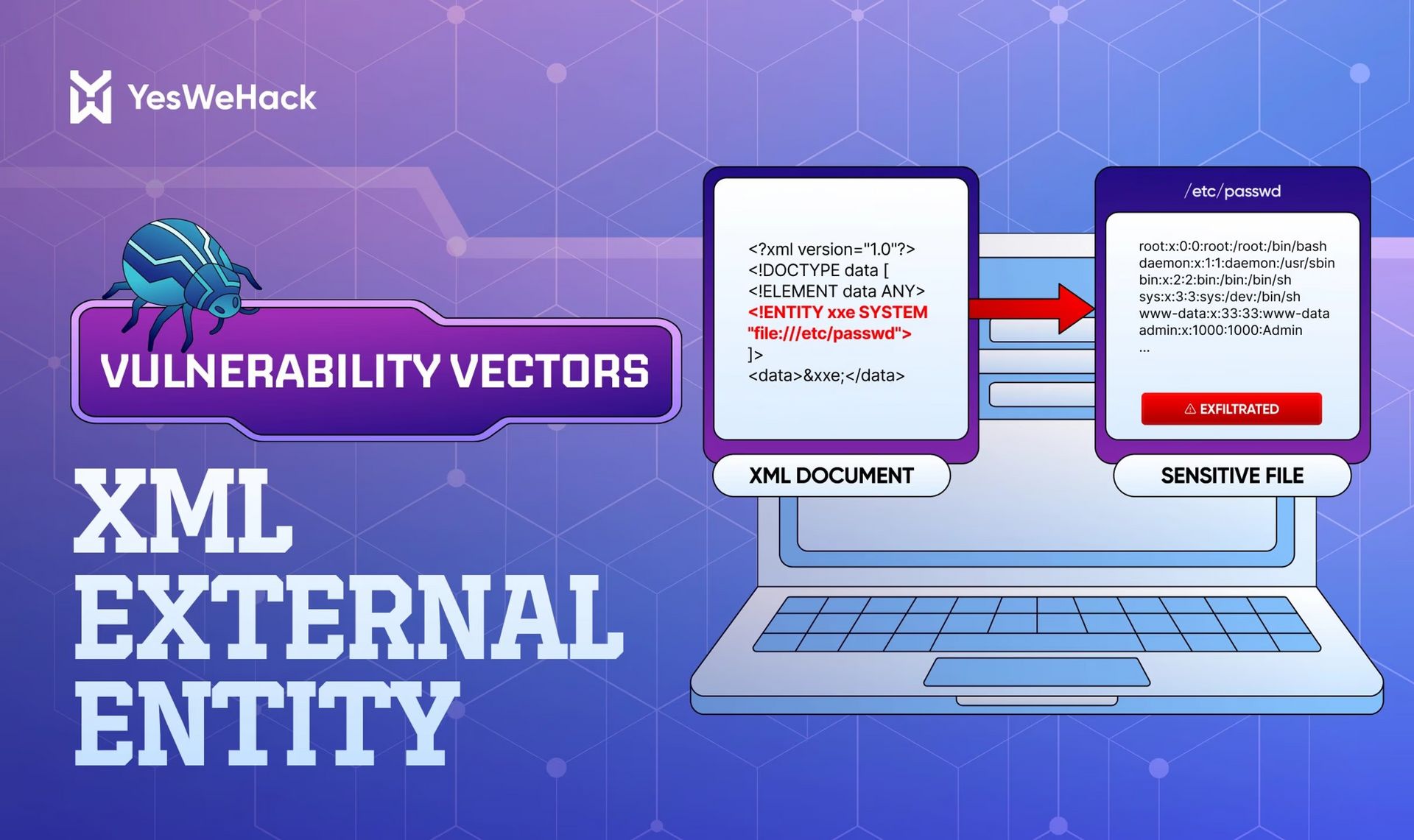

Exploiting XXE: A Practical Bug Bounty Guide [📓 Blog]

by YesWeHack

YesWeHack’s XXE guide surveys common XML entry points (including document formats like DOCX/SVG and SOAP-style APIs) and covers file-read, SSRF, and blind/OOB exfiltration techniques. It includes worked payload examples using external DTDs and error-based strategies, plus pointers to DTD tooling to speed up target-specific payload building.

MCP Security Risks: OAuth and Dynamic Client Registration Pitfalls [📓 Blog]

by Voorivex Team (@voorivexAcademy)

Voorivex analyzes attack paths in MCP (Model Context Protocol) servers acting as AI connectors, focusing on misconfigured Dynamic Client Registration and OAuth flows. The post shows how these mistakes can chain into XSS and escalate into SSRF-style access against internal endpoints in AI integration setups.

Using the Browser Console to Spot Additional Web Bugs [𝕏 Tweet]

by Intigriti

Intigriti shared a thread of practical browser console techniques that help uncover issues that don’t show up in the UI, including hints from runtime errors, network call patterns, and client-side state. It’s a quick refresher on using DevTools as a primary signal source during manual testing.

Four Core Concepts for Hunting Web Cache Poisoning (WCP) [𝕏 Tweet]

by Bugcrowd

Bugcrowd linked a beginner-oriented overview of foundational concepts behind web cache poisoning, aimed at helping new hunters reason about cache keys and unkeyed inputs. It’s a lightweight primer before moving into variant discovery and exploit chaining.
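
If cache keys and unkeyed inputs are new to you, this toy model may help. It is an illustrative sketch and not taken from the linked overview: `origin` and `ToyCache` are hypothetical, and the assumption is an origin that reflects X-Forwarded-Host while the cache keys only on Host and path.

```python
def origin(path: str, headers: dict) -> str:
    # Hypothetical origin that reflects an unkeyed header into the page.
    host = headers.get("X-Forwarded-Host", headers["Host"])
    return f'<script src="https://{host}/app.js"></script>'


class ToyCache:
    def __init__(self):
        self.store = {}

    def fetch(self, path: str, headers: dict) -> str:
        # The cache key ignores X-Forwarded-Host, so that header is "unkeyed".
        key = (headers["Host"], path)
        if key not in self.store:
            self.store[key] = origin(path, headers)
        return self.store[key]


cache = ToyCache()
# The attacker primes the cache with a request carrying the unkeyed header.
cache.fetch("/", {"Host": "shop.example", "X-Forwarded-Host": "evil.example"})
# A normal visitor with the same cache key now receives the stored response.
victim = cache.fetch("/", {"Host": "shop.example"})
print(victim)
```

The victim never sent the malicious header, yet gets the attacker-influenced response because both requests map to the same key.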

Android Bug Bounty Workflow: A Quick Starting Point [𝕏 Tweet]

by YesWeHack

YesWeHack shared a short workflow for getting traction on Android bounty targets, focusing on initial setup and early-stage testing checkpoints. It’s oriented toward structuring the first pass rather than deep-dive exploitation.

Azure DevOps Self-Hosted Agent Interception [📓 Blog]

by Critical Thinking Podcast

This write-up demonstrates an end-to-end attack against Azure DevOps self-hosted agents by recovering DPAPI-protected credentials, forging a JWT to obtain tokens, and hijacking the agent session to decrypt pipeline messages. It includes reproducible PowerShell steps and highlights how default filesystem permissions on agent directories can turn a VM foothold into full pipeline/secret compromise.

Automated LLM Red Teaming at Scale With promptfoo [📓 Blog]

by NVISO Labs (@NVISO_Labs)

NVISO walks through building repeatable LLM security test suites with promptfoo, using a deliberately vulnerable Chainlit app as the target. It covers prompt-injection and data leakage test design, scoring, and CI-style automation to move LLM red teaming from ad-hoc probing to continuous coverage.

Did I miss something? Tell me.

DTMF Exfiltration, CSRF in Iframes, and Cross-Consumer Attacks [🎥 Video]

by Critical Thinking Podcast (@criticalthinkingpodcast)

This episode mixes bug bounty news and research with a standout real-world exploit: using tapjacking and DTMF tones to make Gemini exfiltrate a victim’s 2FA code via an automated phone call. It also covers a practical CSRF insight about iframes, trends in AI-assisted hacking, and introduces “cross-consumer attacks” as a recurring third-party integration risk pattern.

Token Scope Confusion and Privilege Escalation [🎥 Video]

by Medusa (@medusa_0xf)

Medusa explains how scope generation/validation bugs can produce cross-client privilege escalation when multiple clients share auth flows. The video walks through a PoC with API traffic showing how a low-privileged session can become admin-capable due to inconsistent scope enforcement.

Manual Bug Hunting: Recon, JavaScript Review, and Common Vuln Classes [🎥 Video]

by JakSec

This long-form guide covers a feature-driven recon workflow and hands-on JavaScript analysis, with demos of tools like JXScout and Autorize. It also walks through real bug patterns (IDOR, DOM XSS, postMessage issues, header leaks) with practical testing steps.

Three Practical ChatGPT Techniques for Bug Bounty Work [🎥 Video]

by Harrison Richardson (@rs0n)

Harrison Richardson demonstrates three ways to use ChatGPT to accelerate common bounty tasks, including recon support, payload/template drafting, and report writing. The video stresses validating outputs rather than treating AI suggestions as ground truth, and includes some promotion for the creator’s paid community.

Common Primitives Behind Game Hacking [🎥 Video]

by DeadOverflow (deadoverflow)

DeadOverflow surveys the core primitives that show up across game exploitation, from memory scanning/editing to code injection and network interception. It also touches on anti-cheat realities and where client-server trust boundaries tend to break down in practice.

WAF/Firewall Bypass Techniques Seen in Bug Bounty [🎥 Video]

by zack0x01

This video covers practical WAF and network-filter evasion tactics, including payload mutation/encoding, header manipulation, and iterating delivery vectors in Burp. It’s geared toward adapting to signature-based defenses while validating behavior changes at the application layer.

Did I miss something? Tell me.

Broken Access Control Exposes Contact Submission PII [𝕏 Tweet]

by Owl.exe (@datafuel0)

Owl.exe reported an access control failure where an authenticated user could enumerate all contact form submissions via GET /rest/v1/contacts, exposing PII. The post frames it as a critical improper access control issue (CWE-284).

FastAdmin CVE-2024-7928 Still Getting Mass-Exposed [𝕏 Tweet]

by 0x0smilex

0x0smilex warned that CVE-2024-7928 in FastAdmin remains widely exploitable, with FOFA-indexed instances allegedly exposing credentials without authentication. The impact described is straightforward database takeover for unpatched deployments.

Google Dork Set for Subdomain Takeover Recon [𝕏 Tweet]

by n0aziXss (@n0aziXss)

This tweet links a small collection of Google dorks intended to surface subdomain takeover candidates. It’s a quick recon resource when you want alternate discovery angles beyond certificate logs and DNS history.

Dependency Confusion: A Minimal Takeover Test Checklist [𝕏 Tweet]

by Intigriti

Intigriti shared a short checklist for dependency confusion triage: extract internal package names (repos/sourcemaps), verify registry presence (e.g., npm), and assess takeover risk if names are unclaimed. It’s a concise reminder of the basic workflow before digging into build contexts and package resolution.
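
The checklist above can be sketched roughly as follows. This is a minimal illustration, not Intigriti's tooling: `candidate_names` and `triage` are hypothetical helpers, the package name `acme-internal-utils` is made up, and the registry lookup is injected as a function so the example runs offline. In practice you would swap in a real lookup against the registry you care about.

```python
import json


def candidate_names(package_json_text: str) -> list:
    # Step 1: pull dependency names out of a recovered package.json.
    manifest = json.loads(package_json_text)
    names = set()
    for section in ("dependencies", "devDependencies", "optionalDependencies"):
        names.update(manifest.get(section, {}))
    return sorted(names)


def triage(names, exists_on_registry) -> list:
    # Steps 2 and 3: anything missing from the public registry is a
    # takeover candidate that deserves manual review.
    return [name for name in names if not exists_on_registry(name)]


manifest = '{"dependencies": {"react": "^18.0.0", "acme-internal-utils": "1.2.3"}}'
known_public = {"react"}  # stand-in for a real registry lookup
flagged = triage(candidate_names(manifest), lambda name: name in known_public)
print(flagged)
```

A flagged name is only a lead: scoped packages, private registry configuration, and lockfiles all affect whether the build would actually resolve a public package.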

Multilingual Prompt Injection Bypasses LLM Safety Filters [𝕏 Tweet]

by Jenish Sojitra (@_jensec)

Jenish Sojitra reported bypassing multiple LLM safety layers (including Azure’s content filtering) by switching prompt-injection payloads into Thai and Arabic. The results suggest uneven multilingual coverage in runtime filtering and policy enforcement.

Content-Type Mismatches That Turn “Safe JSON” Into XSS [𝕏 Tweet]

by Critical Thinking Podcast

Critical Thinking Podcast highlighted a common pitfall where servers treat a response as JSON based on Content-Type, but browsers still render it as HTML under certain conditions. The mismatch can create an XSS path even when backend validation assumes the payload is non-executable.
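
As a rough triage aid, here is a small heuristic sketch for spotting this kind of mismatch in captured responses. It is an assumption on my part about what the mismatch looks like (a JSON-shaped body served with a non-JSON Content-Type), and `risky_json_response` is a hypothetical helper, not something from the linked post.

```python
def risky_json_response(headers: dict, body: str) -> bool:
    # Normalise "application/json; charset=utf-8" down to the bare media type.
    content_type = headers.get("content-type", "").split(";")[0].strip().lower()
    is_json_type = content_type == "application/json" or content_type.endswith("+json")
    looks_like_json = body.lstrip().startswith(("{", "["))
    # Flag JSON-shaped bodies that carry markup but are not labelled as JSON.
    return looks_like_json and not is_json_type and "<" in body


payload = '{"name": "<img src=x onerror=alert(1)>"}'
print(risky_json_response({"content-type": "text/html; charset=utf-8"}, payload))
print(risky_json_response({"content-type": "application/json"}, payload))
```

Anything it flags still needs a manual check in a real browser, since rendering depends on the exact headers and how the response is loaded.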

Did I miss something? Tell me.

Because Disclosure Matters: This newsletter was produced with the assistance of AI. While I strive for accuracy and quality, not all content has been independently vetted or fact-checked. Please allow for a reasonable margin of error. The views expressed are my own and do not reflect those of my employer.